I’ve been working with a few clients to move their email marketing to a new home, as most of them had outgrown what they could reliably send through their existing infrastructure or hosts. Now, marketing isn’t really my strong point, and I’m definitely no designer, but I recommended Campaign Monitor and helped move a number of campaigns over to it (the other key contenders appear to be MailChimp and Constant Contact).

Campaign Monitor appealed to me for its clear, easy-to-understand website and its fantastic interactive tool for creating mailings, and because it generally seemed to be a good company.

Yesterday, they rolled out a new feature, conveniently just as I was sending out a mailing for one of my clients. A/B testing is something I’ve done before with web content but never with email campaigns, so I was intrigued to see how it would work (I was dreading having to segment my mailing list and so on…).

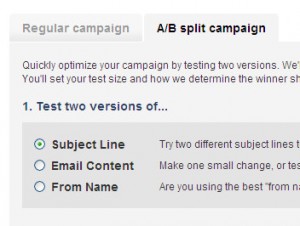

The good news is that it’s really simple. You can test different subject lines, email content, or From addresses.

In my case, the feature arrived a little too late for me to create two designs (I’m not a designer, and even basic changes tend to take me a while!), so I plumped for the subject line test.

I chose my two subject lines, picked the subscriber list to send to (in this case a fairly small one, only around 45 recipients) and moved on to the next step.

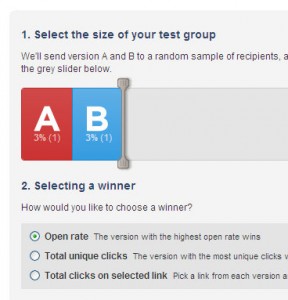

Next, I had to decide how the winner would be picked, and how much of my list would make up “the test”.

Here came my first problem: the default size Campaign Monitor chose for my “test” was just two recipients, one receiving option A and one receiving option B. The idea is that, based on the reaction (which version got opened more, which got the most clicks overall, or which got the most clicks on a specific link), the winning version then goes to the rest of the list. Even if I had run the test on all 45 recipients, it probably wouldn’t have been statistically valid, so a two-person sample tells you almost nothing. I certainly think Campaign Monitor should set a higher minimum for the sample size it suggests.
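To make the mechanism concrete, here’s a minimal Python sketch of what a test like this does in principle: shuffle the list, send version A to one random group and version B to another, hold the rest back, then pick the version with the better rate on the chosen metric. This is purely my own illustration, not Campaign Monitor’s actual implementation; the names `split_for_ab_test`, `pick_winner` and `SplitPlan`, comparing rates rather than raw counts, and letting A win ties are all hypothetical choices.

```python
import random
from dataclasses import dataclass


@dataclass
class SplitPlan:
    """Hypothetical result of splitting a list for an A/B test."""
    variant_a: list
    variant_b: list
    remainder: list  # receives the winning version once the test ends


def split_for_ab_test(subscribers, test_size_per_variant, seed=None):
    """Randomly assign test_size_per_variant subscribers to each version."""
    if test_size_per_variant < 1 or 2 * test_size_per_variant > len(subscribers):
        raise ValueError("test size must be between 1 and half the list")
    rng = random.Random(seed)
    shuffled = list(subscribers)  # copy so the caller's list is untouched
    rng.shuffle(shuffled)
    n = test_size_per_variant
    return SplitPlan(shuffled[:n], shuffled[n:2 * n], shuffled[2 * n:])


def pick_winner(successes_a, sent_a, successes_b, sent_b):
    """Return "A" or "B" by the higher rate of the chosen metric.

    successes_* could be opens, total clicks, or clicks on one link.
    Ties go to A, which is an arbitrary, assumed rule.
    """
    rate_a = successes_a / sent_a
    rate_b = successes_b / sent_b
    return "A" if rate_a >= rate_b else "B"


if __name__ == "__main__":
    subscribers = [f"subscriber{i}@example.com" for i in range(45)]
    plan = split_for_ab_test(subscribers, test_size_per_variant=5, seed=1)
    print(len(plan.variant_a), len(plan.variant_b), len(plan.remainder))  # 5 5 35
    print(pick_winner(3, 5, 2, 5))  # A
```

With a default of one recipient per version, `pick_winner` ends up comparing a single opened-or-not against another, which is why the sample size matters so much.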

Anyway, I upped the test size to five recipients per version. I agree that isn’t ideal, but I was really just seeing how the functionality worked; this wasn’t a huge marketing exercise!

Off the test went, set to run for three hours. Then came my next problem: it soon became apparent that I really should have chosen a bigger sample size to get a meaningful response. Unfortunately, by this stage “the test” is locked: you can’t extend it to more people or extend the time before a winner is chosen.
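To put a rough number on “bigger sample size”, here’s a back-of-the-envelope Python sketch using the standard normal-approximation formula for comparing two proportions (here, the open rates of the two subject lines). The inputs are purely illustrative assumptions, not figures from my campaign: a 20% baseline open rate, a hoped-for 30% for the better subject line, a two-sided 5% significance level, and 80% power. The function name `recipients_per_variant` is hypothetical.

```python
import math
from statistics import NormalDist


def recipients_per_variant(p_a, p_b, alpha=0.05, power=0.80):
    """Approximate recipients needed per version to detect p_a vs p_b.

    Normal-approximation sample size for a two-sided test of two
    independent proportions (e.g. open rates of subject lines A and B).
    """
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # about 1.96 for alpha = 0.05
    z_beta = NormalDist().inv_cdf(power)           # about 0.84 for 80% power
    p_bar = (p_a + p_b) / 2
    numerator = (
        z_alpha * math.sqrt(2 * p_bar * (1 - p_bar))
        + z_beta * math.sqrt(p_a * (1 - p_a) + p_b * (1 - p_b))
    ) ** 2
    return math.ceil(numerator / (p_a - p_b) ** 2)


if __name__ == "__main__":
    # Assumed inputs: 20% vs 30% open rate, alpha 0.05, 80% power
    n = recipients_per_variant(0.20, 0.30)
    print(n)  # 294 per version, so about 588 in total
```

Under those assumptions you’d want roughly 294 recipients per version, more than six times my entire 45-person list. Smaller differences between the versions push the number up sharply, because it scales with 1 / (p_a - p_b) squared.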

I’m sure there are clear guidelines on why you shouldn’t do that sort of thing in the first place (it probably invalidates the whole test, or causes hurricanes in Bolivia or something…), but it struck me that it would have been nice to be able to do it anyway! I’ll certainly be using the feature on my next, larger-scale campaigns.

Note: MailChimp also appears to support split A/B testing of campaigns in a similar way; I couldn’t tell whether Constant Contact does.

September 21, 2009 at 12:34 am

Hey Lee,

Thanks for the review, we’re glad you like our new feature. We’re pretty excited about it ourselves!

As you found, with a small list the tests aren’t quite as useful, but once you have a bigger chunk of subscribers, you’ll find you get some valuable insights.

Generally we’ve tried to keep things as simple as possible, so you set your options up front, and then it all just works without you needing to do anything.

We can see how it might be useful in some cases to adjust the parameters afterwards, but as you can imagine, that would definitely confuse the reporting.

We’d love to hear how you go with testing on a bigger campaign too.